Azure AD Domain Services (AADDS) is a way to provide legacy authentication protocols to your Azure Virtual Networks, without having to build AD Domain Controllers or provide any kind of reach back to your existing AD infrastructure. AADDS is a Microsoft-managed deployment of Active Directory, where the customer/tenant is not responsible for management of the underlying compute, storage and networking surrounding the service.

Azure AD Domain Services provides a one-way synchronization from Azure Active Directory to the managed domain. In addition, only certain attributes are synchronized down to the managed domain, along with groups, group memberships, and passwords. The integration is to the AAD tenant which is already present when AADDS is created from an Azure subscription.

As this is a managed service, it can be expensive, and tenants/customers forfeit a number of controls, which can make using AADDS in a production context a challenge. Many organisations end up ruling out AADDS because of its shortcomings around management. That said, many are using AADDS in production to offer authentication for services such as AVD, which still requires the Session Hosts to be joined to a traditional Active Directory. As all of the objects are pulled from the AAD tenant into AADDS, it is possible to sync only cloud-only objects to AADDS, so that objects synced from traditional AD into AAD are not synced any further from AAD to AADDS. This is configurable when the AADDS instance is being created, as highlighted below.

Information: You can only create a single managed domain serviced by Azure AD Domain Services for a single Azure AD directory. This means that each AAD tenant can only have one AADDS domain, regardless of how many customers, subscriptions, etc. are served from that tenant. This is a potential limitation, and it cannot be worked around due to the nature of the sync relationship between AAD and AADDS.

Common use cases and patterns to consider AADDS for:

- Legacy authentication is required in a cloud-only environment, when no traditional AD DS exists.

- No cross-site connectivity between on-premises and Azure is available, which makes extending the existing AD DS to Azure difficult.

- Domain Services are required in an air-gapped manner for services such as Remote Desktop Services (RDS) or Azure Virtual Desktop (AVD).

Information: Although AADDS might read as an extension of your existing directory services, please note that this is an entirely new AD DS domain. Therefore, any application authentication integrations would have to be reconfigured to trust the new domain during a migration to Azure. Please do not forget that, as this is a new domain, any AD controls such as groups or service accounts used to control in-application functionality or permissions would have to be recreated. It is possible to leverage Group Policy Objects (GPOs) in AADDS.

Open the Azure Portal, search for Azure AD Domain Services (AADDS) and click Create.

On the Basics tab, we must select a Subscription for the AADDS instance. A DNS Primary Name is also required; this is the internal DNS namespace which is used to build the new AD DS domain. The infrastructure to support AADDS must be hosted within an Azure region; I have selected UK South below. We have also selected the Enterprise SKU.

The Networking tab for AADDS is important, as the AADDS service must be joined to a virtual network to ensure applications and services can use the legacy domain. In this example we create a new virtual network and subnet. Please note the AADDS service will be created in a new subnet, and nothing else should be deployed into this subnet as it must be exclusively for AADDS. AADDS will have private addresses within this subnet, which are used in the DNS Server configuration to ensure servers and clients can discover the AADDS service/domain.
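If you are planning the address space by hand, the "dedicated subnet" rule above can be sanity-checked before you reach the portal. The following is only a minimal sketch using Python's standard `ipaddress` module; the function name `check_dedicated_subnet` and the address ranges are made up for illustration and are not values suggested by the portal.

```python
import ipaddress


def check_dedicated_subnet(vnet_cidr, aadds_subnet_cidr, other_subnet_cidrs):
    """Return a list of problems with a proposed dedicated AADDS subnet.

    An empty list means the proposed subnet sits inside the virtual network
    range and does not overlap any of the other (workload) subnets.
    """
    vnet = ipaddress.ip_network(vnet_cidr)
    aadds = ipaddress.ip_network(aadds_subnet_cidr)
    problems = []

    # The AADDS subnet must be carved out of the virtual network's range.
    if not aadds.subnet_of(vnet):
        problems.append(f"{aadds} is not inside the virtual network range {vnet}")

    # Nothing else should share address space with the AADDS subnet.
    for cidr in other_subnet_cidrs:
        other = ipaddress.ip_network(cidr)
        if aadds.overlaps(other):
            problems.append(f"{aadds} overlaps workload subnet {other}")

    return problems


if __name__ == "__main__":
    # Hypothetical ranges: one /24 for AADDS, one /24 for workloads.
    print(check_dedicated_subnet("10.0.0.0/16", "10.0.0.0/24", ["10.0.1.0/24"]))
```

This only checks the addressing plan. It does not inspect what is actually deployed in the subnet, which still has to be verified in Azure.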

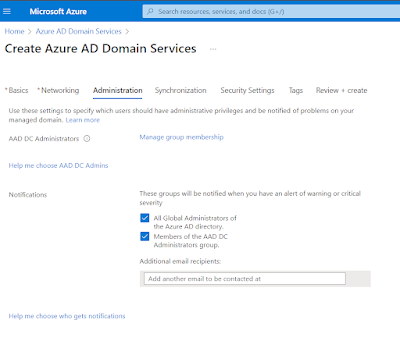

The Administration tab outlines which existing users and groups within AAD are granted privileged access to the AADDS managed domain. Please note you are not granted Domain Administrator or Enterprise Administrator rights in the managed domain. You also cannot extend the schema of the Active Directory, which limits the usage of custom attributes for complex application scenarios. Management of the AADDS domain is done by the users who are members of the AAD DC Administrators group. This group starts out life in AAD as an assigned group. You then add members to this AAD group, and they are granted management permissions within the new AADDS domain.

You will notice below the check box for "All Global Administrators of the Azure AD Directory" is selected. This ensures that all Global Admins within the tenant are also given management permissions within the domain, over and above the AAD DC Administrators.

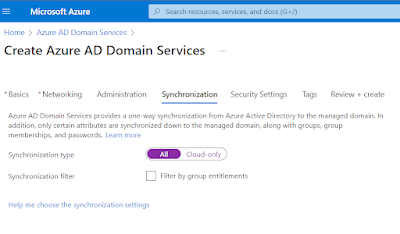

We now configure which objects we would like to sync into the new directory. By default you can configure a full sync, where objects which originated from on-premises AD and have been synced to AAD are then pushed into AADDS. The architecture looks something like this: the on-premises AD syncs to AAD via Azure AD Connect, then AADDS syncs from AAD into the managed domain. There is never a direct link between AADDS and the on-premises AD.

You can of course select Cloud-only, where only objects which originated in the AAD tenant are synced to the AADDS instance, leaving behind anything in AAD which originated from on-premises AD.
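To make the difference between the two sync scopes concrete, here is a small conceptual model in Python. It is purely illustrative: the `DirectoryObject` class, the `synced_from_on_prem` flag and the scope strings are hypothetical names invented for this sketch, not part of any Azure API, and the real service applies the scope for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectoryObject:
    """A user or group as it exists in the AAD tenant (simplified)."""
    name: str
    synced_from_on_prem: bool  # True if the object reached AAD from on-premises AD


def objects_in_scope(aad_objects, scope):
    """Return the objects that would flow one-way from AAD into the managed domain."""
    if scope == "all":
        # Full sync: everything in AAD, wherever it originated.
        return list(aad_objects)
    if scope == "cloud-only":
        # Cloud-only: leave behind anything that originated on-premises.
        return [o for o in aad_objects if not o.synced_from_on_prem]
    raise ValueError(f"unknown sync scope: {scope!r}")


if __name__ == "__main__":
    tenant = [
        DirectoryObject("alice (created in AAD)", synced_from_on_prem=False),
        DirectoryObject("bob (synced from on-premises AD)", synced_from_on_prem=True),
    ]
    for scope in ("all", "cloud-only"):
        print(scope, [o.name for o in objects_in_scope(tenant, scope)])
```

In both cases the flow is one-way, from AAD into the managed domain; the model never writes anything back to AAD or to the on-premises AD.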

It is possible to fine tune the security settings for parameters such as TLS, NTLM, Kerberos etc. It is probably only advisable to do this if you have a compelling reason to.

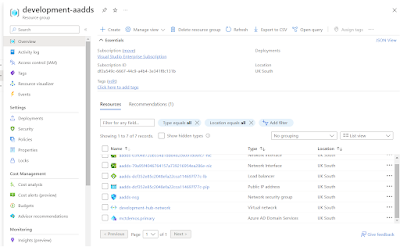

The creation of an AADDS domain takes around 45 minutes, so be patient. Once the service has finished deploying, you will be able to integrate the infrastructure behind the service. You will notice that no Virtual Machines are available as part of AADDS; these are entirely abstracted from the customer.

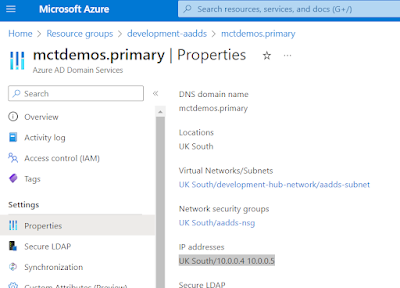

To ensure your new managed domain is reachable you must find which IP addresses have been assigned to the AADDS Domain Controllers underneath the platform. Go to Properties from the AADDS resource object and you will find the addresses there. These are private addresses and we will configure our virtual network to use these addresses as the DNS to ensure the domain can be resolved.

To do this, open the virtual network and go to DNS Servers, then click on Custom. From here, enter the addresses of the AADDS service and commit the change. All infrastructure provisioned on this network will then automatically be given these DNS addresses, so the new AADDS domain can be resolved. With this in place, you should be able to join a new machine to your new domain.
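Before attempting a domain join, it can be worth confirming from a machine on the virtual network that the domain name really does resolve to the addresses shown under Properties. The sketch below is one possible way to do that with Python's standard `socket` module. The domain name and IP addresses are placeholders, the helper names are made up for this example, and it assumes (as is usual for Active Directory) that the domain name itself resolves to the domain controller addresses.

```python
import socket


def resolve_ipv4(hostname):
    """Resolve a hostname to a set of IPv4 addresses using the system's DNS settings."""
    infos = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return {info[4][0] for info in infos}


def check_domain_resolution(domain, expected_ips, resolver=resolve_ipv4):
    """Check that `domain` resolves and that every expected address is returned.

    Returns a (success, message) tuple. The resolver is injectable so the
    check can be exercised without network access.
    """
    try:
        resolved = set(resolver(domain))
    except socket.gaierror as exc:
        return False, f"{domain} did not resolve: {exc}"

    missing = set(expected_ips) - resolved
    if missing:
        return False, f"{domain} resolved to {sorted(resolved)}, missing {sorted(missing)}"
    return True, f"{domain} resolves to {sorted(resolved)}"


if __name__ == "__main__":
    # Placeholder values: substitute your own domain name and the two
    # addresses listed on the AADDS Properties page.
    ok, message = check_domain_resolution("aadds.example.com", ["10.0.0.4", "10.0.0.5"])
    print("OK" if ok else "FAILED", "-", message)
```

If the check fails on a machine that existed before the DNS change, the machine may still be holding its old DNS settings and may need to renew them or restart.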

To manage the domain, use something like the Remote Server Administration Tools (RSAT) from a management workstation with the correct credentials. From here you will be able to open the domain tree, Group Policy, etc.