Accurate documentation on how to implement device-certificate-based authentication for DirectAccess clients is extremely scarce. If you’re implementing DirectAccess from scratch, I would recommend getting it working with AD credentials only, then enabling device certificates once you are confident in the general configuration. I recently did just this: the DirectAccess server and clients were functioning correctly, and the next stage was to enable Computer Certificates from Step 2 – Remote Access Server.

In my lab I only have a single-tier AD CS PKI setup, so I selected Use Computer Certificates but did not tick Use an Intermediate Certificate. That option would be required if you had a two-tier AD CS PKI.

For clarity, at this point you should choose the certificate that is issued by your Certificate Authority. The wizard does not make clear what else is required to make IPsec work correctly.

Once you do the above and Group Policy refreshes, you will start getting an error about IPsec not working.

“Error: there is no valid certificate to be used by IPsec which chains to the root/intermediate certificate configured to be used by IPsec in the DirectAccess configuration.”

“Causes: the certificate has not been installed or is not valid.”

“Resolution: please ensure that a valid certificate is present in the machine store and DA server is configured to use the corresponding root certificate.”

The reason for this error is that a suitable certificate is not installed on the DirectAccess server. This might seem obvious; however, the configuration step in Step 2 – Remote Access Server does not install a certificate to make IPsec work. It simply points the DirectAccess configuration at the PKI to trust for device certificates.
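To make the condition in the error message concrete, here is a minimal Python sketch of the check it describes: the machine store must hold at least one certificate that is currently valid and whose issuer chain leads to the CA configured in DirectAccess. This is only a simplified model for illustration, not how Windows actually validates certificates. It compares subject and issuer names and ignores signatures, revocation, and key usage. The `Cert` class, the function names, and the sample names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List


@dataclass(frozen=True)
class Cert:
    """Hypothetical, stripped-down view of a certificate."""
    subject: str
    issuer: str
    not_before: datetime
    not_after: datetime


def chains_to(cert: Cert, trusted_subject: str,
              ca_certs: Iterable[Cert], max_depth: int = 5) -> bool:
    """Follow issuer names upward until the trusted CA is reached."""
    by_subject = {c.subject: c for c in ca_certs}
    current = cert
    for _ in range(max_depth):
        if current.issuer == trusted_subject:
            return True
        parent = by_subject.get(current.issuer)
        if parent is None or parent == current:
            # Unknown issuer, or a self-signed certificate that is not trusted.
            return False
        current = parent
    return False


def has_usable_ipsec_cert(machine_store: List[Cert], ca_certs: List[Cert],
                          trusted_subject: str, now: datetime) -> bool:
    """True if any stored certificate is in date and chains to the trusted CA."""
    return any(
        c.not_before <= now <= c.not_after
        and chains_to(c, trusted_subject, ca_certs)
        for c in machine_store
    )


if __name__ == "__main__":
    utc = timezone.utc
    root = Cert("CN=Lab Root CA", "CN=Lab Root CA",
                datetime(2020, 1, 1, tzinfo=utc), datetime(2040, 1, 1, tzinfo=utc))
    now = datetime(2024, 1, 1, tzinfo=utc)
    # An empty machine store models the situation right after the wizard step:
    # the trust anchor is configured, but no server certificate exists yet.
    print(has_usable_ipsec_cert([], [root], "CN=Lab Root CA", now))
```

In this model, pointing the configuration at the PKI only sets `trusted_subject`. It never adds anything to `machine_store`, which is why the check keeps failing until a certificate is enrolled on the server.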

With that said, you must configure a custom AD CS template with specific settings to make IPsec work for DirectAccess. A certificate from this template must then be installed on all of the DirectAccess servers.

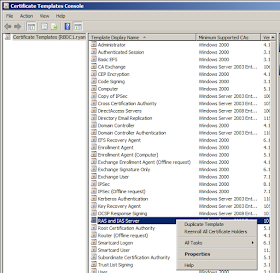

To do this, open Certification Authority and click Certificate Templates.

Right-click Certificate Templates and select Manage.

Find the default certificate template called RAS and IAS Server, right-click it and select Duplicate Template.

On the General tab, give your new template a descriptive name. I also select Publish Certificate in Active Directory.

Click on the Security tab, add the Domain Computers group and grant the following permissions

Click the Extensions tab and click Application Policies, then Edit.

Click Add from the Edit Application Policies Extension window.

Enter a descriptive text string for the new Application Policy. Do not make any alterations to the Object Identifier, and click OK.

Do not forget to issue the new template (right-click Certificate Templates, then New, then Certificate Template to Issue) to ensure it is available for certificate enrolment.
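If you prefer to script the publishing step, the following Python sketch wraps the `Add-CATemplate` PowerShell cmdlet from the ADCSAdministration module. I am assuming it runs elevated on the CA server itself and that you pass the template's short name rather than its display name. The template name `DirectAccessIPsec` is purely hypothetical.

```python
import subprocess
import sys
from typing import List


def publish_template_command(template_name: str) -> List[str]:
    """Build the PowerShell call that publishes a template on the local CA."""
    safe = template_name.replace("'", "''")  # escape single quotes for PowerShell
    return [
        "powershell.exe", "-NoProfile", "-Command",
        f"Add-CATemplate -Name '{safe}' -Force",
    ]


def publish_template(template_name: str) -> None:
    """Run the command; only meaningful on a Windows CA server."""
    if sys.platform != "win32":
        raise RuntimeError("This sketch must run on the Windows CA server.")
    subprocess.run(publish_template_command(template_name), check=True)


if __name__ == "__main__":
    # Hypothetical template name; replace with your own.
    print(" ".join(publish_template_command("DirectAccessIPsec")))
```

Building the command separately from running it makes it easy to review exactly what will be executed before touching the CA.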

The next step to fix “IPsec is not working properly.” is to enroll a certificate on each of the DirectAccess servers using the new template. It should be installed in the local computer's Personal store on each of the DirectAccess servers.

Once this certificate is installed, run gpupdate /force on each of the DirectAccess servers. The IPsec errors should then disappear.
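The last two steps, enrolling the certificate and refreshing Group Policy, can also be scripted. The sketch below is one possible approach, not the only one: it calls the `Get-Certificate` PowerShell cmdlet to enroll into the computer's Personal store (`Cert:\LocalMachine\My`) and then runs `gpupdate /force`. I am assuming it runs elevated on each DirectAccess server and that the server has Enroll permission on the template. The template name `DirectAccessIPsec` is hypothetical.

```python
import subprocess
import sys
from typing import List


def enroll_command(template_name: str) -> List[str]:
    """Build the PowerShell call that enrolls a machine certificate."""
    safe = template_name.replace("'", "''")  # escape single quotes for PowerShell
    script = (
        f"Get-Certificate -Template '{safe}' "
        "-CertStoreLocation Cert:\\LocalMachine\\My"
    )
    return ["powershell.exe", "-NoProfile", "-Command", script]


def gpupdate_command() -> List[str]:
    """Build the Group Policy refresh command used in the article."""
    return ["gpupdate", "/force"]


def enroll_and_refresh(template_name: str) -> None:
    """Enroll the certificate, then refresh Group Policy on this server."""
    if sys.platform != "win32":
        raise RuntimeError("This sketch must run on a DirectAccess server.")
    for cmd in (enroll_command(template_name), gpupdate_command()):
        subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    # Hypothetical template name; replace with your own.
    for cmd in (enroll_command("DirectAccessIPsec"), gpupdate_command()):
        print(" ".join(cmd))
```

Run it once per DirectAccess server. Afterwards you can confirm the certificate is present with `certutil -store My` before checking that the IPsec error has cleared.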